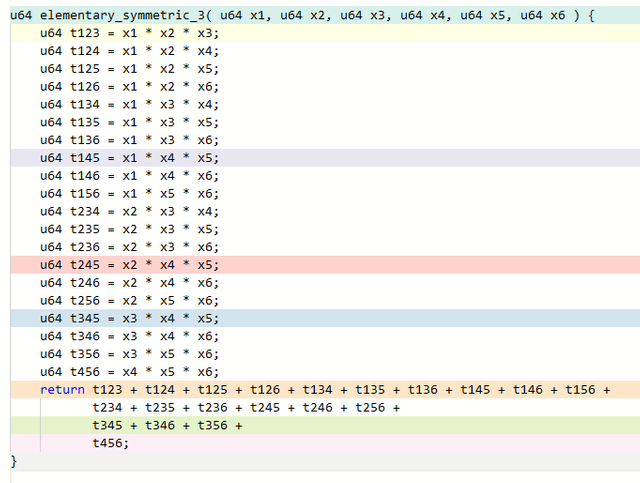

Today's experiment with the Compiler Explorer: let's compute an elementary symmetric polynomial:

This is the sum of all 3-element products of the input variables. This is an example where we can see a significant difference between two C++ compilers, clang (LLVM) version 6.0.0 and gcc 8.1.1:

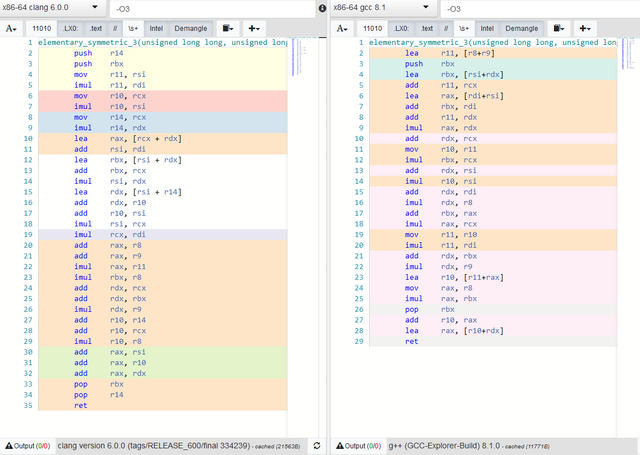

The calling convention (ABI) both compilers are using puts x1 in rdi, x2 in rsi, x3 in rdx, x4 in rcx, x5 in r8, and x6 in 'r9'. With that we can figure out what the two compilers are actually doing.

Clang (on the left) first saves a couple of callee-saved registers it will use for temporary space. The convention is that a function must restore these to their original state before returning (which is does with the pop instructions on lines 33 and 34.) A caller-saved register, on the other hand, can be freely overwritten by the function; all the registers used for arguments, r10, and r11 fall into this category. rax is the register used for the return value, so it can be used for working space as well.

We can work through the assembly using "symbolic execution": just remember, for each register, what it is in terms of the input arguments.

| Lines | effect |

|---|---|

| start | rdi = x1, rsi=x2, rdx=x3, rcx=x4, r8=x5, r9=x6 |

| 4-5 | r11 = x1 * x2 |

| 6-7 | r10 = x2 * x4 |

| 8-9 | r14 =x3 * x4 |

| 10 | rax = x3 + x4 |

| 11 | rsi = x1 + x2 |

| 12-13 | rbx = x1 + x2 + x3 +x4 |

| 14 | rsi = (x1+x2)*x3 |

| 15-16 | rdx = rsi + r14 + 410 = x1x3 + x2x3 + x3x4 + x2x4 |

| 17 | r10 = r10 + rsi = x1x3 + x2x3 + x2x4 |

| 18 | rsi = rsi * rcx = x1x2x4 + x1x3x4 |

| 19 | rcx = rcx * rdi = x1x4 |

| 20-21 | rax = x3 + x4 + x5 + x6 |

| 22 | rax = (x3+x4+x5+x6) * (x1 * x2) = x1x2x3 + x1x2x4 + x1x2x5 + x1x2x6 |

| 23 | rbx = rbx * r8 = (x1+x2+x3+x4) * x5 |

| 24-25 | rdx = rdx + rcx + rbx = x1x3 + x1x4 + x1x5 + x2x3 + x2x4 + x2x5 + x3x4 + x3x5 + x4x5 |

| 26 | rdx = rdx * x9 = x1x3x6 + x1x4x6 + x1x5x6 + x2x3x6 + x2x4x6 + x2x5x6 + x3x4x6 + x3x5x6 + x4x5x6 |

| 27-28 | r10 = r10 + r14 + rcx = x1x3 + x2x3 + x2x4 + x3x4 + x1x4 |

| 29 | r10 = r10 * x5 = x1x3x5 + x1x4x5 + x2x3x5 + x2x4x5 + x3x4x5 |

| 30 | rax = rax + rsi + r10 + rdx |

So we calculated:

- the 4 terms containing x1x2 in RAX (line 22): x1x2(x3+x4+x5+x6)

- the 2 remaining terms lacking both x5 and x6 in RSI: x1(x2+x3)x4

- the 5 terms without x6 in R10: ((x1+x2)x3 + x1x4 + x2x4 + x3x4)x5

- the 9 remaining terms containing x6 in RDX: ((x1+x2)x3 + x1x4 + x2x4 + x3x4 + (x1+x2+x3+x4)x5)x6

This takes a total of 10 multiplications; GCC (on the right above) somehow manages to do it in two fewer, and uses one fewer temporary variable. GCC also interleaves the instructions to a greater extent

| Lines | effect |

|---|---|

| start | rdi = x1, rsi=x2, rdx=x3, rcx=x4, r8=x5, r9=x6 |

| 2, 5, 8 | r11 = x3 + x4 + x5 + x6 |

| 4, 7 | rbx =x1 + x2 + x3 |

| 6 | rax = x1 + x2 |

| 9 | rax = (x1+x2)*x3 |

| 10, 13, 15 | rdx = x1 + x2 + x3 + x4 |

| 11,14 | r10 = x2*(x3 + x4 + x5 + x6) |

| 12 | rbx = rbx * rcx = (x1+x2+x3)*x4 |

| 16 | rdx = (x1+ x2 +x3 + x4)*x5 |

| 17 | rbx = rbx + rax = (x1+x2)x3 + (x1+x2+x3)x4 |

| 18 | rax = rax * rcx = (x1+x2)x3x4 |

| 19-20 | r11 = r10 * rdi = x1x2(x3+x4+x5+x6) |

| 21-22 | rdx = ( rdx + rbx ) * r9 = (x1+x2)x3x6 + (x1+x2+x3)x4x6 + (x1+ x2 +x3 + x4)x5x6 |

| 23 | r10 = r11+ rax = (x1+x2)x3x4 + x1x2(x3+x4+x5+x6) |

| 24-25 | rax = r8 * rbx = (x1+x2)x3x5 + (x1+x2+x3)x4x5 |

| 27-28 | rax = rax + r10 + rdx |

This logic, in addition to requiring fewer multiplies, is actually a bit easier to follow:

- 9 terms with x6 are all finished on line 21-22: (x1+x2+x3+x4)x5x6 + (x1+x2+x3)x4x6 + (x1+x2)x3x6

- 4 terms with x1x2 are calculated on line 19-20: x1x2(x3+x4+x5+x6)

- 2 terms with x3x4 but no x5 or x6 are on line 18: (x1+x2)x3x4

- 5 terms ending in x3x5 or x4x5 are calculated on line 24-25: (x1+x2)x3x5 +(x1+x2+x3)x4x5

The Clang version multiplied four variables directly together: x1 * x2, x1 * x4, x2 * x4, and x3 * x4. This GCC version manages to avoid those "inefficient" lone multiplications entirely, although x1 * x2 * (x3+x4+x5+x6) and (x1+x2) * x3 * x4 could be calculated in either order, so apples-to-apples there is a net of two fewer multiplications.

I'm not sure if this is the best we can do. The elementary symmetric functions can be rewritten in terms of power functions, but computing the sum of 1st through 6th powers on the integers here will be a lot of multiplications (though it might parallelize.) Real-world references are typically concerned with performing the computation on floating-point numbers, where stability takes precedence over raw performance.